When BeLinda Berry was in graduate school in 2017, she posed nude for a photographer who was a friend of hers. “I was living on my own, exploring my sexuality and my freedom,” she said. The experience was empowering.

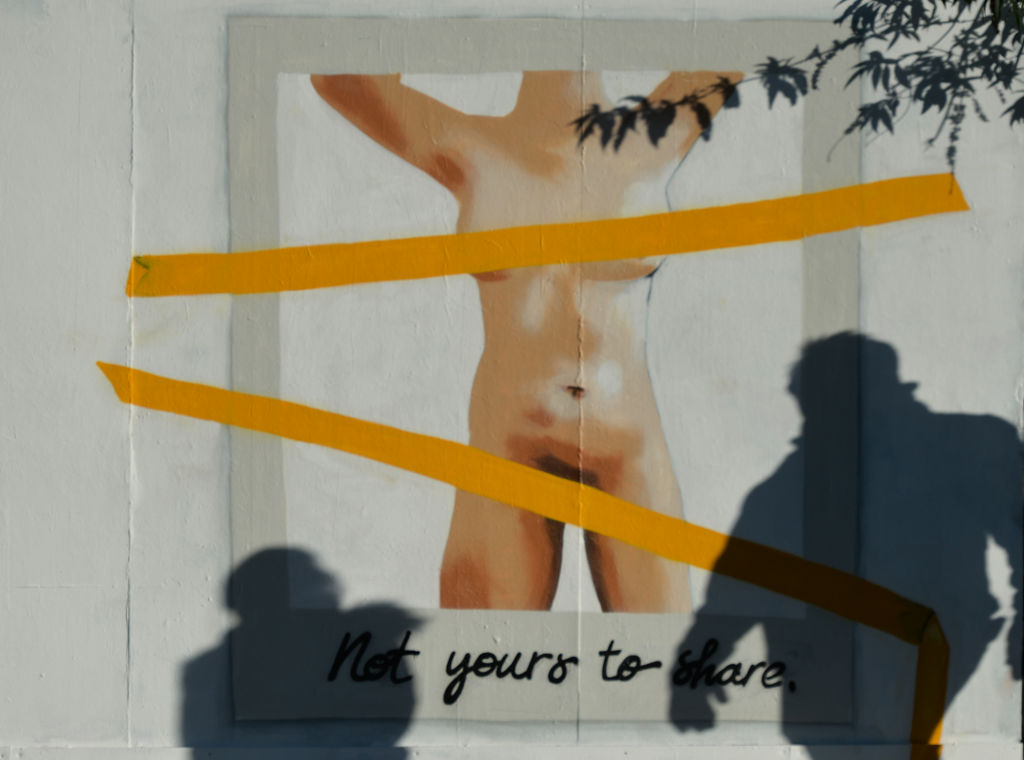

The photographer posted the images in an online portfolio accessible to anyone with the link. Soon after, a girl from her Ohio hometown reached out to tell Berry her images were showing up in invite-only local Discord channels dedicated to “revenge porn,” a misleading term commonly used to describe intimate images shared online without the consent of the subject. Someone also posted the photos, with her name and hometown, to a now-shuttered website called Anon-IB, which trafficked in so-called revenge porn and child pornography. “Suddenly, there were people anonymously posting, ‘Oh, man, I’ve been wanting to see these tits for years. Does anybody have any more?’” she said.

Berry didn’t even try to go to the police. “I didn’t feel like I had a right to make a case because I had the photos taken of me, and I knew to some degree that they would be out in the world,” she said. “There was a lot of internal victim-blaming.”

At the time, Ohio didn’t have a specific law against nonconsensual intimate image sharing. What’s more, Anon-IB was based abroad in the Netherlands. Berry, now an advocate with the nonprofit March Against Revenge Porn, was on her own.

In recent years, as awareness of the issue has risen thanks to high-profile leaks involving celebrities and public figures, many countries and local governments have outlawed the practice. Under pressure from advocates, big online platforms have begun implementing their own policies. But victims and lawyers report the laws just aren’t working. Although the internet has no borders and content travels freely, remedies vary vastly across jurisdictions, and there is very little cross-border cooperation between law enforcement agencies. Meanwhile, the crime—which disproportionately affects women and people who are lesbian, gay or bisexual—is flourishing.

When multiple jurisdictions are involved, even when they have intimate image abuse laws on the books, police will often try to pass the buck, said Honza Cervenka, a lawyer with British-American firm McAllister Olivarius.

“If I’m in Scotland and my perpetrator is in Virginia, I go to my local Scottish police, and they say, ‘No, you have to go to Virginia because that’s where your perpetrator lives.’ I call the perpetrator’s local precinct in Virginia, and they tell me, ‘You’re not here. In order to start a complaint, you need to go to your local police station, and then perhaps we can collaborate on this case with them,’” Cervenka said. “But in reality, I’ve never seen that collaboration be done successfully.”

Globally, regulations overwhelmingly criminalize sharing an individual’s intimate images without their consent, but the specifics differ widely. In some jurisdictions, it’s a sex crime akin to harassment. In others, it is treated as a privacy violation. In New Zealand, a specialized government-approved agency tries to negotiate a settlement between the parties before the case is turned over to the courts. The Philippines is more punitive: Sentences range from a minimum of three years in prison to a maximum of seven years in prison.

One particularly contentious issue when it comes to crafting legislation is the question of intent—whether the person sharing the images wanted to harm the victim. In places like the U.S. state of Ohio, New Zealand, and the United Kingdom, critics say laws fail to bring perpetrators to justice because of the intent language. According to research conducted by advocacy group the Cyber Civil Rights Initiative (CCRI), most people who share intimate images without the subject’s consent are doing so without aiming to hurt the subject.

The disparities are particularly palpable in the United States, where 48 states and the District of Columbia all have their own relevant laws. New York, for example, has an “intent to harm” clause, whereas Illinois, whose law advocates view as the gold standard, does not.

For victims, these variances mean navigating a morass of different rules. “What would ideally be a simple avenue to get justice becomes a complicated maneuvering exercise between exactly what the intent element is in Minnesota as opposed to what the intent element is defined as in New Hampshire,” Cervenka said. “Many victims just give up.”

One solution, at least in the United States, would be a comprehensive federal law. But thus far, no bill has been successful in Congress (although attempts are still being made).

In the United States, the main disagreement has been around scope. If a law is too narrowly defined, it would apply in only a limited subset of cases, argue victims’ advocates. But if it’s too broad, it risks being overly punitive and silencing speech, say civil rights groups.

In countries with weaker free speech protections and rule of law, this dilemma becomes even starker. “A lot of our concern has been about how the crime is described,” said Erika Smith of Take Back the Tech, a global anti-tech-related violence campaign. “Is it so broad that anything can fit in? Because we know that governments will twist the laws and use them against marginalized groups.”

This kind of misuse has been reported in Pakistan, for example. Similar concerns have been raised about Mexico’s recent law, which includes potential sentences of up to six years in prison. Candy Rodríguez, spokesperson for Acoso.Online, a group that supports victims of online harassment in Latin America, said the law was a sign of progress but an overly punitive stance would disproportionately affect marginalized communities. “It is mostly people of color or the poorest who receive prison sentences in Mexico,” Rodríguez said.

In the absence of adequate protections, victims of nonconsensual intimate image sharing sometimes seek remedies in more established legal territory like privacy or copyright law. The latter is fairly harmonized around the world and allows victims, who often just want their images to disappear from the internet, to more swiftly deal with the problem. After Berry said she held the copyright to her images, Anon-IB took them down. (Generally, copyright law only applies to images that were taken by the victim, such as selfies.)

In the European Union, victims have some recourse with the General Data Protection Regulation, the behemoth online privacy law implemented in 2018. The law includes a “right to erasure,” requiring tech companies to take down content about a person if that person requests it. But the process is complicated and lengthy, which is not particularly helpful when haste is key. If not removed immediately, intimate images often get downloaded and disseminated broadly.

Although Big Tech companies like Google and Facebook have cracked down on intimate image abuse in recent years, advocates say there’s much more work to be done. Unless they have a copyright claim, victims have to rely on the goodwill of individual platforms to erase their images.

Online platforms are generally protected from being held liable for content posted by their users unless legislators choose to carve out exceptions. The United States, where most Big Tech companies are based, does not make exceptions for intimate image abuse, which critics say makes it very difficult for victims to hold platforms accountable. But Brazil, for example, does.

“That changed things a lot,” said Mariana Valente, a Brazil-based researcher and author of a 2018 report on laws governing intimate image abuse globally. “Brazil has been fairly able to enforce local law with platforms, but it’s taken years. They resisted a lot at the beginning,” Valente said. “This also has to do with the relative importance of the Brazilian market.” Smaller countries might not have as much leverage.

So far, there is little concerted effort on the part of governments or law enforcement agencies to collaborate on these issues.

Ideally, there would be a multilateral regulatory regime with an enforcement arm, said attorney Ann Olivarius of McAllister Olivarius. “There should be an international law. … Not just a treaty or international protocol, but a real law.”

There is precedent for international cooperation on cybercrime. Governments have long been collaborating in the fight against child pornography, for example. But nude adult selfies are a much harder sell. In the end, changing attitudes may be just as important as legal reform.

“We’ve had situations where police officers will gawk at the photos,” said Mary Anne Franks, head of the CCRI and one of the leading advocates for stronger laws to protect victims. “They’ll ogle them. They’ll laugh about them. They’ll pass them around the station. The idea that that law is actually going to be useful for that victim in that situation is a legal fiction. You want to move society to a place where it sees this as something very wrong…and that has serious consequences.”

Although Berry has managed to have her images taken down and Dutch authorities seized the servers of Anon-IB in 2018, her images are still out there. Every now and then, she’ll get clusters of Facebook friend requests from people she hasn’t spoken to in a decade. “Then I’m like ‘Oh, somebody probably found the photos again,’” she said.

Hanna Kozlowska